Hand Gesture Interface for Medical Visualization Applications (Gestix)

Introduction. Computer information technology is penetrating ever deeper into the hospital environment. It is important that such technology be used safely, to avoid serious mistakes that could lead to fatal incidents. Keyboards and mice are today's principal means of human-computer interaction.

Figure 1

It has been found that computer keyboards and mice are the most common sources of cross-contamination and the spread of infection in intensive care units (ICUs) today. Introducing a more natural form of human-computer interaction (HCI) will have a positive impact on today's hospital environment. The basic forms of human-human communication are speech, gesture, and body language (including facial expression, hand and body gestures, and retinal/iris tracking).

We are currently exploring only the use of hand gestures. In the future we expect to extend these capabilities with other modalities. We propose a video-based gesture capture system for manipulating windows and objects within a graphical user interface (GUI).

Figure 2

The real-time operation of the gesture interface is currently being tested in a hospital environment. In this domain, the non-contact nature of the gesture interface avoids the possible transfer of contagious diseases through the traditional keyboard/mouse user interface.

System Overview. A web camera placed above the screen (Figure 3a) captures a sequence of images like those shown in Figure 3b. The hand is segmented using color-motion fusion, black-and-white (B&W) thresholding, and various morphological image processing operations.
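The segmentation steps named above can be sketched roughly as follows. This is only an illustration, not the Gestix implementation: the weighted-average fusion rule, the 0.5 threshold, the 3x3 structuring element, and all function names are assumptions, and small lists of rows stand in for camera frames.

```python
# Illustrative sketch only: the fusion rule, threshold and 3x3 window are
# assumptions, not details taken from the Gestix system.

def fuse(skin_prob, motion, weight=0.5):
    """Blend per-pixel skin-color likelihood with a motion cue (both in [0, 1])."""
    return [[weight * s + (1 - weight) * m for s, m in zip(srow, mrow)]
            for srow, mrow in zip(skin_prob, motion)]

def threshold(gray, level=0.5):
    """Turn the fused map into a black-and-white (0/1) mask."""
    return [[1 if v >= level else 0 for v in row] for row in gray]

def _window(mask, r, c):
    """3x3 neighborhood of (r, c); pixels outside the image count as background."""
    h, w = len(mask), len(mask[0])
    return [mask[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0
            for rr in (r - 1, r, r + 1) for cc in (c - 1, c, c + 1)]

def erode(mask):
    """Keep a pixel only if its whole 3x3 neighborhood is foreground."""
    return [[int(all(_window(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]

def dilate(mask):
    """Set a pixel if any pixel in its 3x3 neighborhood is foreground."""
    return [[int(any(_window(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]

def segment_hand(skin_prob, motion):
    """Fuse the cues, threshold, then apply a morphological opening."""
    mask = threshold(fuse(skin_prob, motion))
    return dilate(erode(mask))  # opening: erosion followed by dilation
```

In this sketch the fusion step suppresses skin-colored regions that do not move, and the opening removes isolated specks that survive the threshold.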

Figure 3

Figure 4

The location of the hand in each image is represented by the 2D coordinates of its centroid and mapped into one of eight possible navigational directions to position the cursor of a virtual mouse. The motion of the hand is interpreted by a tracking module. At certain points in the interaction it becomes necessary to classify the pose of the hand; the image is then cropped tightly around the hand blob and a more accurate segmentation is performed.
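As a rough illustration of the centroid-to-direction mapping, the sketch below computes the centroid of a binary hand mask and quantizes its offset from a reference point into eight 45-degree sectors. The compass labels, the choice of reference point, and the function names are assumptions made for the example.

```python
import math

# Hypothetical labels for the eight navigational directions, counterclockwise.
DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]

def centroid(mask):
    """Return the (x, y) centroid of the nonzero pixels, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    return (sum_x / count, sum_y / count) if count else None

def direction(cx, cy, ref_x, ref_y):
    """Quantize the centroid's offset from (ref_x, ref_y) into one of 8 sectors."""
    dx = cx - ref_x
    dy = ref_y - cy  # image y grows downward; flip it so that "N" means up
    angle = math.degrees(math.atan2(dy, dx)) % 360
    # Each sector spans 45 degrees and is centered on its compass direction.
    return DIRECTIONS[int((angle + 22.5) // 45) % 8]
```

For example, a centroid directly above the reference point maps to "N", and one below and to the right maps to "SE".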

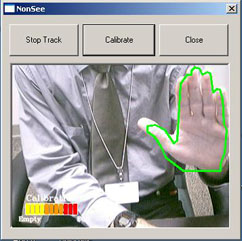

Segmentation. When starting the hand gesture interface in real time, the user must first 'calibrate' the system. The interface preview window shows the outline of a palm drawn on the screen. The user places a hand within this template while a skin-color model is built (Figure 3), after which the tracking module is triggered to follow the hand.
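The text does not say which color space or model is used, so the following is only one plausible sketch of the calibration step: a histogram over normalized (r, g) chromaticity, built from the pixels that fall inside the on-screen template. The bin count and all names are assumptions.

```python
from collections import Counter

BINS = 16  # assumed histogram resolution per chromaticity axis

def chroma_bin(pixel):
    """Map an (R, G, B) pixel to a bin of normalized (r, g) chromaticity."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return (0, 0)
    # Dividing by the total makes the bin largely independent of brightness.
    return (min(int(BINS * r / total), BINS - 1),
            min(int(BINS * g / total), BINS - 1))

def build_skin_model(calibration_pixels):
    """Build a histogram model from pixels sampled inside the hand template."""
    counts = Counter(chroma_bin(p) for p in calibration_pixels)
    if not counts:
        return {}
    peak = max(counts.values())
    return {bin_: n / peak for bin_, n in counts.items()}

def skin_likelihood(model, pixel):
    """Return a score in [0, 1]; unseen colors score 0."""
    return model.get(chroma_bin(pixel), 0.0)
```

A model built this way gives the same score to a darker version of a calibrated skin tone, which is one reason chromaticity is a common choice for this kind of calibration.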

Figure 5

Figure 6

Hand Tracking. We classify hand gestures using a simple finite-state machine. When the physician wishes to move the cursor across the screen, he moves his hand out of the ‘neutral area’ into any of the eight navigational quadrants. The interaction is designed this way because the physician will often have his hands in the ‘neutral area’ without intending to control the cursor. When the hand moves into one of the eight navigational quadrants, the cursor moves in the requested direction.
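A minimal sketch of such a state machine is shown below. The two states, the zone labels, and the class name are assumptions for illustration; the actual machine may have more states and transitions.

```python
from enum import Enum

class State(Enum):
    NEUTRAL = "neutral"        # hand rests in the neutral area; cursor is idle
    NAVIGATING = "navigating"  # hand is in one of the eight navigational zones

class GestureFSM:
    """Two-state machine driven by the zone the hand currently occupies."""

    def __init__(self):
        self.state = State.NEUTRAL
        self.direction = None

    def update(self, zone):
        """Advance one frame.

        zone is "neutral", None (hand not found), or a direction label
        such as "NE". Returns the direction to move the cursor, or None.
        """
        if zone is None or zone == "neutral":
            self.state = State.NEUTRAL
            self.direction = None
        else:
            self.state = State.NAVIGATING
            self.direction = zone
        return self.direction
```

Calling `update` once per frame keeps the cursor still while the hand rests in the neutral area and returns a direction as soon as the hand leaves it.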

To facilitate positioning, we map hand motion to cursor movement: small, slow (large, fast) hand motions cause small (large) changes in cursor position. In this manner the user can precisely control cursor alignment.
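One common way to obtain this behavior is a gain that grows with hand speed. The sketch below assumes a linear gain with an upper limit; the constants and the function name are hypothetical and not taken from the system.

```python
import math

def cursor_step(dx, dy, dt, base_gain=1.0, accel=0.02, max_gain=5.0):
    """Scale a hand displacement (dx, dy), in pixels over dt seconds, into a cursor step.

    The gain rises linearly with hand speed and is capped at max_gain, so
    slow motions give fine control and fast motions cover more distance.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    speed = math.hypot(dx, dy) / dt  # hand speed in pixels per second
    gain = min(base_gain + accel * speed, max_gain)
    return gain * dx, gain * dy
```

With these assumed constants, a 2-pixel motion in 0.1 s is amplified by a factor of about 1.4, while a 40-pixel motion in the same time reaches the cap of 5.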

Current Work. We are interested in extending the navigation system with gestures that perform different commands, such as "click", "double-click", "drag", etc. The present challenge is to segment the hand with an accurate bounding window around it. This is achieved by combining the tracking module with blob analysis of the B&W image. Some examples of the segmented gestures are shown in Figure 7.
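One way to combine a tracked position with blob analysis, sketched here under assumptions, is to flood-fill the connected blob that contains the tracker's estimate and take its extent as the bounding window. The 4-connectivity, the seed convention, and the function name are choices made for the example.

```python
from collections import deque

def blob_bounding_box(mask, seed):
    """Return (x0, y0, x1, y1), inclusive, for the blob containing seed=(x, y).

    mask is a B&W image given as a list of rows of 0/1 values. The seed is
    assumed to come from the tracking module. Returns None if the seed is
    outside the image or lies on background.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    sx, sy = seed
    if not (0 <= sx < width and 0 <= sy < height) or not mask[sy][sx]:
        return None
    seen = {(sx, sy)}
    queue = deque([(sx, sy)])
    x0 = x1 = sx
    y0 = y1 = sy
    while queue:
        x, y = queue.popleft()
        x0, x1 = min(x0, x), max(x1, x)
        y0, y1 = min(y0, y), max(y1, y)
        # Visit the 4-connected neighbors that are foreground and unvisited.
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= nx < width and 0 <= ny < height
                    and mask[ny][nx] and (nx, ny) not in seen):
                seen.add((nx, ny))
                queue.append((nx, ny))
    return x0, y0, x1, y1
```

Seeding from the tracked position means that other foreground blobs in the frame, such as a face or a second hand, do not enlarge the window.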

Figure 7

Demos:

- 10/9/2004, 1:43 / 3.7 MB

- Gesture Recognition. 11/14/2004, 2:19 / 4.6 MB

- Gesture Recognition with virtual buttons. 06/10/2005, 0:32 / 1.3 MB

- Both hands are tracked with different colors. 08/29/2005, 0:03 / 193 kb

- Recognizing the 'letter A' in American sign language. 08/15/2005, 0:17 / 1.8 MB

- Hand Gestures Control Display of Patient Data. 8/31/2005, 0:41 / 2.6 MB

- Image Rotation via Manipulation of a Pencil (Scalpel). 2/16/2006, 1:13 / 4.3 MB

- Live Demonstration During Neurosurgical Procedure (Short version). 08/01/2006, 0:27 / 3.9 MB

- Live Demonstration During Neurosurgical Procedure (Long version). 08/01/2006, 2:58 / 28.8 MB

Papers:

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A hand gesture sterile tool for browsing MRI images in the OR". Journal of the American Medical Informatics Association. 2008. Volume 15, Issue 3.

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A Real-Time Hand Gesture Interface for a Medical Image Guided System". International Journal of Intelligent Computing in Medical Sciences and Image Processing. Accepted.

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "Gestix: A Doctor-Computer Sterile Gesture Interface for Dynamic Environments". In: Saad, A.; Avineri, E.; Dahal, K.; Sarfraz, M.; Roy, R. (Eds.), Soft Computing in Industrial Applications: Recent and Emerging Methods and Techniques. Series: Advances in Soft Computing, Vol. 39, 2007, pp. 30-39.

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A Real-Time Hand Gesture System Based on Evolutionary Search". Vision (v22, n3, 2006), Society of Manufacturing Engineers, Dearborn, Mich. (PDF, 470 kb)

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "Gestix: A Doctor-Computer Sterile Gesture Interface for Dynamic Environments". 11th Online World Conference on Soft Computing in Industrial Applications, September 18th - October 6th, 2006, on the World Wide Web. (PDF, 330 kb)

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A Real-Time Hand Gesture Interface for a Medical Image Guided System". Ninth Israeli Symposium on Computer-Aided Surgery, Medical Robotics, and Medical Imaging (ISRACAS 2006), May 11, 2006, Petach Tikva, Israel. (PDF, 300 kb)

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A Real-Time Hand Gesture Interface for Medical Visualization Applications". 10th Online World Conference on Soft Computing in Industrial Applications, September 19th - October 7th, 2005. (PDF, 481 kb)

- J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith, and J. Handler. "A Real-Time Hand Gesture System Based on Evolutionary Search". Genetic and Evolutionary Computation Conference (GECCO 2005), June 2005, Washington DC, USA. (PDF, 316 kb)

Future Steps:

- Embed Gesture Tracking into Azyxxi System (imminent)

- Add a robust Hand Gesture Classification Module

- Add two-handed control (dynamic gesture capability)

- Browse X-rays using the hand gesture system in the OR

Research Fellow:

- Juan Wachs, PhD [1]

Scientific Advisors:

- Helman Stern, Prof [1]

- Yael Edan, Prof [1]

- Michael Gillam, MD [2] (gillam at gmail.com)

- Craig Feied, MD [2] (cfeied at ncemi.org)

- Mark Smith, MD [2]

- Jon Handler, MD [2]

Institutions:

[1] Dept. of Industrial Engineering and Management, Intelligent Systems, Ben-Gurion University of the Negev, Israel

[2] Institute for Medical Informatics, Washington Hospital Center, Washington, DC.