EAS 657

Geophysical Inverse Theory

Robert L. Nowack

Lecture 3

Linear Vector Spaces and Subspaces

This section gives a brief review of linear vector spaces.

A vector space V is defined by

a) A collection of elements called “vectors”.

b) A field of scalars, F.

A linear vector space has the following properties:

vector addition:

closure: if $v_1, v_2 \in V$, then $v_1 + v_2 \in V$

commutative: $v_1 + v_2 = v_2 + v_1$

unique zero: there is a $0$ such that if $v \in V$, then $v + 0 = v$

unique inverse: for each $v \in V$ there is a $-v$ such that $v + (-v) = 0$

scalar multiplication: $\alpha v$

closure: if $v \in V$ and $\alpha \in F$, then $\alpha v \in V$

linearity: $\alpha (v_1 + v_2) = \alpha v_1 + \alpha v_2$ and $(\alpha + \beta) v = \alpha v + \beta v$

linearity:

Ex) n-tuples $x = (x_1, x_2, \dots, x_n)$

$R^n$ (real elements) or $C^n$ (complex elements)

Ex) An infinite sequence of real numbers, $x = (x_1, x_2, x_3, \dots)$. We will be interested in bounded sequences, for which $|x_k| < M$ for all $k$, where $M$ is some constant.

Ex) Consider the interval [a,b] on the real line. The collection C[a,b] of all continuous real-valued functions f(t) on this interval is a linear vector space. The zero vector is the function identically zero on [a,b].

Ex) The collection of polynomial functions on the interval [a,b] is a linear vector space.
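As a minimal sketch, assuming each polynomial $c_0 + c_1 t + c_2 t^2 + \dots$ is stored as a Python list of its coefficients (a representation chosen here for illustration, not taken from the notes), the vector-space operations on polynomials reduce to term-by-term arithmetic on the coefficients. The helper names `poly_add`, `poly_scale`, and `poly_eval` are hypothetical.

```python
from itertools import zip_longest

# A polynomial c0 + c1 t + c2 t^2 + ... is stored as its coefficient list [c0, c1, c2, ...].

def poly_add(p, q):
    """Vector addition: add coefficients term by term, padding the shorter list with zeros."""
    return [a + b for a, b in zip_longest(p, q, fillvalue=0.0)]

def poly_scale(alpha, p):
    """Scalar multiplication: multiply every coefficient by alpha."""
    return [alpha * a for a in p]

def poly_eval(p, t):
    """Evaluate the polynomial at t using Horner's rule."""
    result = 0.0
    for c in reversed(p):
        result = result * t + c
    return result

p = [1.0, 0.0, 2.0]   # 1 + 2 t^2
q = [0.0, 3.0]        # 3 t
zero = [0.0]          # the zero polynomial

s = poly_add(p, q)
print(s)                                      # [1.0, 3.0, 2.0]: again a polynomial (closure)
print(poly_add(p, q) == poly_add(q, p))       # True (commutative)
print(poly_add(p, zero) == p)                 # True (zero vector)
print(poly_add(p, poly_scale(-1.0, p)))       # [0.0, 0.0, 0.0]: p + (-p) is the zero polynomial
print(poly_eval(s, 2.0))                      # 15.0, since 1 + 3*2 + 2*4 = 15
```

Storing coefficients rather than function values makes closure obvious: adding or scaling coefficient lists always yields another coefficient list.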

Subspaces: A subspace V1 of V is a subset of V that is also a vector space.

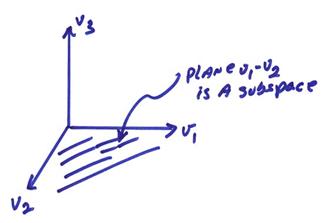

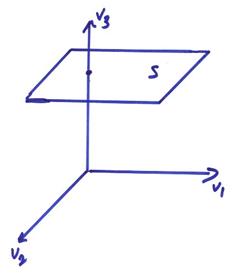

Ex)

The $v_1$-$v_2$ plane is a subspace.

Ex)

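Is S a subspace? No, it doesn’t include the zero vector! But

$$S = V' + x_0$$

where $V'$ is a subspace and $x_0$ is a fixed vector, i.e., $S$ is a subspace shifted away from the origin. The space S is nonetheless very important and is called a hyperplane.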

Ex)

is a subspace of Rn.

Def: A set of vectors $v_1, \dots, v_N \in V$ is linearly dependent iff (if and only if) there exists a set of scalars $\alpha_1, \dots, \alpha_N$, not all zero, such that

$$\sum_{i=1}^{N} \alpha_i v_i = 0$$

Otherwise the set of vectors is called linearly independent.

Ex)

$2 v_1 + 1 v_2 = 0$

Thus, these two vectors are linearly dependent.

Ex)

The equation $\alpha_1 v_1 + \alpha_2 v_2 = 0$ has only the trivial solution $\alpha_1 = \alpha_2 = 0$.

Thus $v_1$ and $v_2$ are linearly independent.
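The test for linear dependence can be sketched in Python by row-reducing the matrix whose rows are $v_1, \dots, v_N$: the vectors are independent exactly when the rank equals $N$, since then the only solution of $\sum \alpha_i v_i = 0$ is the trivial one. The vectors below are hypothetical stand-ins (the original example vectors did not survive); the first pair is chosen so that $2 v_1 + 1 v_2 = 0$. Exact `Fraction` arithmetic avoids round-off in the tests for zero.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of the matrix whose rows are the given vectors, by exact Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0                                   # number of pivots found so far
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every other row.
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """v1..vN are independent iff sum(alpha_i v_i) = 0 forces every alpha_i = 0, i.e. rank == N."""
    return rank(vectors) == len(vectors)

print(linearly_independent([[1, 2], [-2, -4]]))   # False: 2 v1 + 1 v2 = 0
print(linearly_independent([[1, 0], [1, 1]]))     # True
```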

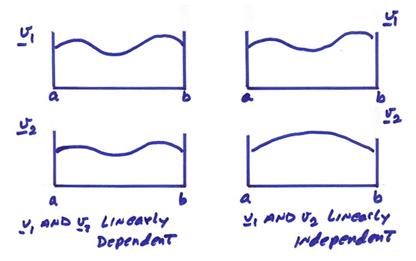

Ex) The function space C[a,b] of continuous functions on [a,b].

In the figure below, the functions on the left are linearly dependent and the functions on the right are linearly independent.

A vector v can be written in terms of $v_1, \dots, v_N$ if there exist scalars $\alpha_1, \dots, \alpha_N$ such that

$$v = \sum_{i=1}^{N} \alpha_i v_i$$

A set of vectors spans a subspace $S$ if all the vectors in $S$ can be expressed in terms of this set of vectors.

Ex) In the figure below, vectors $v_1, v_2$ span the $v_1$-$v_2$ plane, but $v_1, x$ also span the $v_1$-$v_2$ plane.

A basis is a linearly independent set of vectors that spans the vector space.

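Ex) The standard basis for $R^N$ is

$$e_1 = (1, 0, \dots, 0)^T, \quad e_2 = (0, 1, 0, \dots, 0)^T, \quad \dots, \quad e_N = (0, \dots, 0, 1)^T$$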

Note that this basis is not unique. However, every basis for a given vector space has the same number of vectors.

Theorem: Every basis of a given vector space has the same number of basis vectors; this number is called the dimension of the space.

Def: A vector space having a finite basis is said to be finite dimensional. All other vector spaces are said to be infinite dimensional.

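Theorem: Given a basis $v_1, \dots, v_N$ for a vector space $V$, if $v = \sum_{i=1}^{N} \alpha_i v_i = \sum_{i=1}^{N} \beta_i v_i$, then $\alpha_i = \beta_i$ for all $i$. That is, the coefficients of a vector in a given basis are unique.

Proof: $\sum_{i=1}^{N} (\alpha_i - \beta_i) v_i = v - v = 0$. But because the $v_i$ are independent, $\alpha_i - \beta_i = 0$, so $\alpha_i = \beta_i$.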
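The uniqueness of the coefficients can also be seen computationally: for $N$ basis vectors in $R^N$, finding the $\alpha_i$ means solving an $N \times N$ linear system whose matrix is invertible, so it has exactly one solution. A minimal sketch using Gauss-Jordan elimination, with a hypothetical basis $v_1 = (1, 1)$, $v_2 = (1, -1)$ and a hypothetical helper `coordinates`:

```python
from fractions import Fraction

def coordinates(basis, v):
    """Solve v = sum_i alpha_i * basis[i] for alpha (N basis vectors in R^N) by Gauss-Jordan elimination."""
    n = len(basis)
    # Augmented matrix [B | v]: column j of B holds basis vector j.
    a = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        # A nonzero pivot always exists because the basis vectors are linearly independent.
        pivot = next(i for i in range(col, n) if a[i][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]            # scale the pivot row so the pivot is 1
        for i in range(n):
            if i != col and a[i][col] != 0:
                f = a[i][col]
                a[i] = [x - f * y for x, y in zip(a[i], a[col])]   # clear the rest of the column
    return [row[n] for row in a]

basis = [[1, 1], [1, -1]]       # hypothetical basis v1 = (1, 1), v2 = (1, -1)
alpha = coordinates(basis, [3, 1])
print([str(c) for c in alpha])  # ['2', '1'], since (3, 1) = 2 (1, 1) + 1 (1, -1)
```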

If u1, …,


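A vector space can also be decomposed into independent subspaces instead of vectors. Thus,

$$V = V_1 \oplus V_2 \oplus V_3 \oplus \cdots \oplus V_m$$

This is called a direct sum decomposition. It reduces to a basis decomposition when the $V_i$ all have dimension 1. For a basis $v_1, v_2, \dots, v_N$,

$$V = v_1 \oplus v_2 \oplus \cdots \oplus v_N$$

where each $v_i$ here stands for the one-dimensional subspace it spans.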

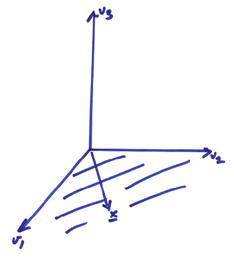

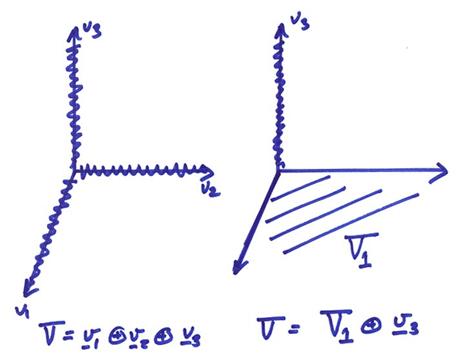

Ex) In the figure below, the vector space $V$ can be written as a direct sum decomposition as

$$V = v_1 \oplus v_2 \oplus v_3$$

or

$$V = V_1 \oplus v_3$$

where $V_1$ is the subspace spanned by $v_1$ and $v_2$.

If $V = U \oplus W$, i.e., $V = U + W$ with $U \cap W = \{0\}$, then $\dim V = \dim U + \dim W$.

The Concept of Length

A vector space with a defined measure of length is called a Normed Vector Space. This adds a great deal more structure to a vector space. A normed vector space has a defined mapping from $V \to R^1$, denoted $\|v\|$, satisfying

1) $\|v\| \ge 0$ for all $v \in V$, and $\|v\| = 0$ only if $v = 0$

2) $\|\alpha v\| = |\alpha| \, \|v\|$

3) $\|v_1 + v_2\| \le \|v_1\| + \|v_2\|$ (the triangle inequality)

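The length measure can also be used to measure the “distance” between two vectors. For $V = R^N$, different length measures, or norms, are possible, for example the $p$-norms

$$\|x\|_p = \left( \sum_{i=1}^{N} |x_i|^p \right)^{1/p}, \quad 1 \le p < \infty$$

$$\|x\|_\infty = \max_i |x_i|$$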

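Ex) $V = C[a,b]$

$$\|f\|_p = \left( \int_a^b |f(t)|^p \, dt \right)^{1/p}, \qquad \|f\|_\infty = \max_{a \le t \le b} |f(t)|$$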

The distance between two vectors can be written in terms of the norm as

$$d(x, y) = \|x - y\|_p$$
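A short sketch of the $p$-norms on $R^N$ and the distance they induce, assuming vectors are plain Python lists (the function names `p_norm` and `distance` are illustrative):

```python
import math

def p_norm(x, p):
    """||x||_p = (sum |x_i|^p)^(1/p) for p >= 1; p = math.inf gives max |x_i|."""
    if p == math.inf:
        return max(abs(xi) for xi in x)
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

def distance(x, y, p):
    """d(x, y) = ||x - y||_p."""
    return p_norm([xi - yi for xi, yi in zip(x, y)], p)

x = [3.0, -4.0]
for p in (1, 2, math.inf):
    print(p, p_norm(x, p))                    # 1 -> 7.0, 2 -> 5.0, inf -> 4.0
print(distance([1.0, 1.0], [4.0, 5.0], 2))    # 5.0
```

The same vector has a different "length" under each norm, which is why the subscript $p$ has to be carried along in $d(x, y)$.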

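For finite dimensional spaces, any two norms $\|\cdot\|_A$ and $\|\cdot\|_B$ are equivalent: there exist constants $C_1, C_2 > 0$ such that

$$C_1 \|v\|_A \le \|v\|_B \le C_2 \|v\|_A \quad \text{for all } v \in V$$

From this, proofs of convergence with one norm can be transferred to other norms.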
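For $R^N$ the constants are known explicitly for common pairs of norms, e.g. $\|v\|_2 \le \|v\|_1 \le \sqrt{N}\,\|v\|_2$ and $\|v\|_\infty \le \|v\|_2 \le \sqrt{N}\,\|v\|_\infty$. The sketch below spot-checks these bounds on random vectors; it is a numerical sanity check under a hypothetical helper `check_equivalence`, not a proof.

```python
import math
import random

def p_norm(x, p):
    """||x||_p for p >= 1; p = math.inf gives max |x_i|."""
    if p == math.inf:
        return max(abs(xi) for xi in x)
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

def check_equivalence(n, trials=1000, seed=0):
    """Check ||v||_2 <= ||v||_1 <= sqrt(n)||v||_2 and ||v||_inf <= ||v||_2 <= sqrt(n)||v||_inf."""
    rng = random.Random(seed)
    tol = 1e-12                      # relative slack for floating-point round-off
    for _ in range(trials):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        n1, n2, ninf = p_norm(v, 1), p_norm(v, 2), p_norm(v, math.inf)
        if not (n2 <= n1 * (1 + tol) and n1 <= math.sqrt(n) * n2 * (1 + tol)):
            return False
        if not (ninf <= n2 * (1 + tol) and n2 <= math.sqrt(n) * ninf * (1 + tol)):
            return False
    return True

print(check_equivalence(5))   # True
```

Note that the constants grow with $N$, which hints at why the equivalence can fail in infinite dimensional spaces.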

A complete normed vector space is called a Banach space. A metric space is complete if every Cauchy sequence in the space converges to some point v in the vector space V.

A sequence $\{v_n\}$ contained in a normed vector space $(V, d)$ is called a Cauchy sequence if for every $\epsilon > 0$ there exists an integer $N(\epsilon)$ such that if $m_1, m_2 > N(\epsilon)$, then $d(v_{m_1}, v_{m_2}) < \epsilon$.

Ex) The rational numbers are not complete on the real line, $V = R^1$, $d(x, y) = |x - y|$. Here a rational number is the set of all fractions of integers equivalent to some fixed fraction, e.g., .5 = 1/2 = 2/4 = 6/12 … The rational numbers are incomplete because the gaps between them are filled by the “irrational numbers,” which are nonperiodic infinite decimals; a Cauchy sequence of rationals can converge to an irrational limit that is not itself rational.

Ex) $\sqrt{2}$ and $\pi$ are irrational numbers.

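Truncating the decimal expansion of an irrational number gives a sequence of rationals that converges in the limit to the given irrational number. Such a sequence is Cauchy in the rationals but has no limit there.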
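For example, truncating $\sqrt{2} = 1.41421\ldots$ after $k$ decimal digits gives the rationals $1, 1.4, 1.41, 1.414, \ldots$. A minimal sketch with Python's `fractions.Fraction` (the helper `truncated_sqrt2` is hypothetical) shows that the terms form a Cauchy sequence of rationals, with terms beyond index $k$ differing from term $k$ by less than $10^{-k}$, while no term squares exactly to 2.

```python
import math
from fractions import Fraction

def truncated_sqrt2(k):
    """The rational floor(sqrt(2) * 10^k) / 10^k, i.e. sqrt(2) truncated to k decimal digits."""
    scale = 10 ** k
    # math.isqrt(2 * scale^2) is exactly floor(sqrt(2) * scale), computed in integer arithmetic.
    return Fraction(math.isqrt(2 * scale * scale), scale)

seq = [truncated_sqrt2(k) for k in range(8)]
for k, q in enumerate(seq):
    print(k, q, float(q * q))   # q*q approaches 2 from below but is never exactly 2

# Cauchy property: for every m > k, terms m and k differ by less than 10^-k.
cauchy = all(abs(seq[m] - seq[k]) < Fraction(1, 10 ** k)
             for k in range(len(seq)) for m in range(k + 1, len(seq)))
print(cauchy)                   # True
```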

Real numbers (rationals + irrationals) are complete on the real line. (Note, however, that the rationals are dense on the real line, since arbitrarily “nearby” every irrational number there is a rational number.)

The real line ($V = R^1$, $d = \|x - y\|_p$) forms a Banach space. By extension, $V = R^N$ with $d = \|x - y\|_p$ forms a Banach space. Since any finite dimensional vector space can be mapped in a one-to-one linear fashion onto $R^N$, then:

Theorem: In a normed linear vector space, any finite-dimensional subspace is complete and hence forms a Banach space.

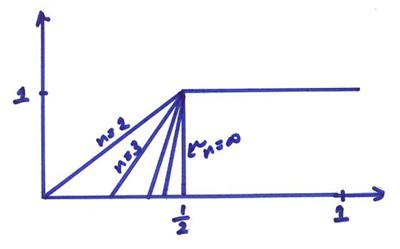

For an infinite sequence of functions $f(t)$ that are continuous on $[0, 1]$, with norm $\|f\|_p = \left( \int_0^1 |f(t)|^p \, dt \right)^{1/p}$ and distance $d(f, g) = \|f - g\|_p$, consider a Cauchy sequence of functions that converges to a discontinuous function.

Since the limit is not a continuous function, this normed space is not complete and hence is not a Banach space. This space can, however, be made complete by adding certain discontinuous functions. We will then call this space Lp[a,b], and it is a Banach space.
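Because the original sequence of functions did not survive extraction, the sketch below uses a hypothetical but standard choice: continuous ramps $f_n$ that are 0 for $t \le 1/2$, rise linearly to 1 on $[1/2, 1/2 + 1/n]$, and equal 1 afterwards, measured in the $p = 2$ norm. The distances $\|f_n - f_{2n}\|_2$ shrink, and $\|f_n - \text{step}\|_2 = 1/\sqrt{3n}$ tends to zero, so the limit is the discontinuous unit step, which is not in $C[0,1]$. Integrals are approximated with the midpoint rule.

```python
def f(n, t):
    """Hypothetical continuous ramp: 0 for t <= 1/2, linear up to 1 on [1/2, 1/2 + 1/n], then 1."""
    return min(1.0, max(0.0, n * (t - 0.5)))

def step(t):
    """The discontinuous limit: the unit step at t = 1/2."""
    return 1.0 if t > 0.5 else 0.0

def l2_distance(g, h, samples=20000):
    """Approximate ||g - h||_2 on [0, 1] with the midpoint rule."""
    dt = 1.0 / samples
    total = sum((g((i + 0.5) * dt) - h((i + 0.5) * dt)) ** 2 for i in range(samples))
    return (total * dt) ** 0.5

for n in (10, 100, 1000):
    d_cauchy = l2_distance(lambda t: f(n, t), lambda t: f(2 * n, t))   # shrinks as n grows
    d_limit = l2_distance(lambda t: f(n, t), step)                     # close to 1/sqrt(3n)
    print(n, d_cauchy, d_limit)
```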

The infinite sequences $x = (x_1, x_2, \dots)$ with $\sum_k |x_k|^p < \infty$ form a Banach space under the $p$-norm. This is called $l_p$.

Note that care must be taken with infinite dimensional spaces to avoid pathological cases.

Now we need to introduce some concepts of directionality. Recall that the dot product of two physical vectors a and b is

$$a \cdot b = |a| \, |b| \cos\theta$$

We can use this to define the “angle” between two vectors, where

$$\cos\theta = \frac{a \cdot b}{|a| \, |b|}$$

The dot product $a \cdot b$ must be defined in such a way that

$$|a \cdot b| \le |a| \, |b|$$

so that $|\cos\theta| \le 1$.

We can use this to define orthogonality of two vectors in a vector space. For a general vector space, we will call $a \cdot b$ the inner product (a,b). The inner product maps pairs $x, y \in V$ into the scalars (real or complex), satisfying the properties below. (Note: the math notation is $a \cdot b$ or (a,b), and the physics notation is $\langle a, b \rangle$ or $\langle a | b \rangle$.)

1) (x,y) = (y,x)* (* is here just the complex conjugation).

2) (x + y,z) = (x,z) + (y,z).

3) (ax,z) = a(x,z). (Properties 2 and 3 define the linearity of the inner product in its first argument.)

3’) (x,az) = a*(x,z). (This follows from properties 1 and 3.)

4) (x,x) > 0 for $x \ne 0$, and (x,x) = 0 for x = 0.

(V,(x,y)) forms an Inner Product Space.
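The four properties can be spot-checked for the standard inner product on $C^N$, $(x, y) = \sum_i x_i y_i^*$, which is linear in its first argument, matching property 3. A minimal Python sketch with arbitrarily chosen complex vectors (the helper names are illustrative, and `cmath.isclose` absorbs round-off):

```python
import cmath

def inner(x, y):
    """Standard inner product on C^N, linear in the first argument: (x, y) = sum_i x_i * conj(y_i)."""
    return sum(xi * yi.conjugate() for xi, yi in zip(x, y))

def close(a, b):
    """Compare complex scalars up to floating-point round-off."""
    return cmath.isclose(a, b, rel_tol=1e-12, abs_tol=1e-12)

x = [1 + 2j, 3 - 1j]
y = [2 - 1j, 1j]
z = [0.5 + 0j, -1 + 1j]
a = 2 - 3j
x_plus_y = [xi + yi for xi, yi in zip(x, y)]

checks = {
    "1) conjugate symmetry": close(inner(x, y), inner(y, x).conjugate()),
    "2) additivity": close(inner(x_plus_y, z), inner(x, z) + inner(y, z)),
    "3) homogeneity": close(inner([a * xi for xi in x], z), a * inner(x, z)),
    "3') conjugate homogeneity": close(inner(x, [a * zi for zi in z]), a.conjugate() * inner(x, z)),
    "4) positivity": inner(x, x).real > 0 and abs(inner(x, x).imag) < 1e-12,
}
for name, ok in checks.items():
    print(name, ok)   # each line ends in True
```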

A suitable norm in an inner product space is

$$\|x\| = (x, x)^{1/2}$$

This is the norm induced by the defined inner product. With this norm we can show Schwarz’s inequality

$$|(x, y)| \le \|x\| \, \|y\|$$

Equality holds if and only if x and y are linearly dependent, i.e., $x = \alpha y$ for some scalar $\alpha$, or $y = 0$.

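The angle between two vectors (in a real inner product space) is defined by

$$\cos\theta = \frac{(x, y)}{\|x\| \, \|y\|}$$

which Schwarz’s inequality guarantees lies in $[-1, 1]$.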

Ex) In $R^N$, let $(x, y) = \sum_{i=1}^{N} x_i y_i = x^T y$. An induced norm from the inner product is then

$$\|x\| = \left( \sum_{i=1}^{N} x_i^2 \right)^{1/2}$$
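A sketch of the $R^N$ inner product, its induced norm, Schwarz’s inequality, and the angle $\cos\theta = (x, y)/(\|x\|\,\|y\|)$ in plain Python (function names are illustrative). The clamp before `math.acos` is a defensive choice against round-off pushing the cosine slightly outside $[-1, 1]$.

```python
import math

def inner(x, y):
    """Standard inner product on R^N: (x, y) = sum_i x_i y_i."""
    return sum(xi * yi for xi, yi in zip(x, y))

def norm(x):
    """Induced norm ||x|| = (x, x)^(1/2)."""
    return math.sqrt(inner(x, x))

def angle_deg(x, y):
    """Angle in degrees from cos(theta) = (x, y) / (||x|| ||y||), clamped to [-1, 1]."""
    c = inner(x, y) / (norm(x) * norm(y))
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))

x, y = [1.0, 0.0], [1.0, 1.0]
print(abs(inner(x, y)) <= norm(x) * norm(y))   # True: Schwarz's inequality
print(angle_deg(x, y))                         # approximately 45 degrees
print(angle_deg([1.0, 2.0], [-2.0, 1.0]))      # approximately 90 degrees: (x, y) = 0
```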

We could also define other norms, for example

$$\|x\|_Q = (x^* Q x)^{1/2}$$

where Q is a positive definite operator, such that

$$x^* Q x > 0$$

for any $x \ne 0$, and Q is Hermitian, $Q^* = Q$ (to be defined a little later on).
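A minimal sketch of the weighted norm for the real case, assuming $Q$ is a real symmetric matrix stored as a list of rows (so Hermitian reduces to symmetric) and assuming the associated inner product is $(x, y)_Q = x^T Q y$. Positive definiteness is checked here only for $2 \times 2$ matrices, via Sylvester's criterion. The last line shows that vectors orthogonal in the standard inner product need not be orthogonal under $Q$.

```python
import math

def q_inner(Q, x, y):
    """Weighted inner product (x, y)_Q = x^T Q y for a real symmetric Q given as a list of rows."""
    n = len(x)
    return sum(x[i] * Q[i][j] * y[j] for i in range(n) for j in range(n))

def q_norm(Q, x):
    """Induced weighted norm ||x||_Q = (x^T Q x)^(1/2); meaningful only if Q is positive definite."""
    return math.sqrt(q_inner(Q, x, x))

def is_positive_definite_2x2(Q):
    """Sylvester's criterion for a symmetric 2x2 matrix: both leading principal minors positive."""
    return Q[0][0] > 0 and Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0] > 0

Q = [[2.0, 1.0], [1.0, 3.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
e1, e2 = [1.0, 0.0], [0.0, 1.0]

print(is_positive_definite_2x2(Q))     # True
print(q_norm(identity, [3.0, 4.0]))    # 5.0: Q = I recovers the usual 2-norm
print(q_inner(identity, e1, e2))       # 0.0: orthogonal in the standard inner product
print(q_inner(Q, e1, e2))              # 1.0: not orthogonal once the inner product is weighted by Q
```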

Ex) For $V = C[a,b]$, define the inner product to be

$$(f, g) = \int_a^b f(t) \, g(t) \, dt$$

or, more generally,

$$(f, g) = \int_a^b \int_a^b f(t) \, K(t, s) \, g^*(s) \, ds \, dt$$

where

$$K(t, s) = K^*(s, t)$$
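The first of these inner products can be approximated numerically. A sketch with the midpoint rule (the helper `inner_c` and the sample count are illustrative choices) checks that $\sin t$ and $\cos t$ are orthogonal on $[0, 2\pi]$ and that $(\sin, \sin) = \pi$:

```python
import math

def inner_c(f, g, a, b, n=10000):
    """Approximate (f, g) = integral_a^b f(t) g(t) dt with the midpoint rule (real-valued f, g)."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) * g(a + (i + 0.5) * h) for i in range(n))

two_pi = 2.0 * math.pi
print(inner_c(math.sin, math.cos, 0.0, two_pi))   # close to 0: sin and cos are orthogonal on [0, 2 pi]
print(inner_c(math.sin, math.sin, 0.0, two_pi))   # close to pi, so ||sin|| = sqrt(pi)
```

For smooth periodic integrands over a full period the midpoint rule is extremely accurate, which is why a plain equally spaced sum suffices here.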

A complete inner product space is called a Hilbert space (recall all finite dimensional spaces are complete by virtue of the completeness of R1 and hence RN).

Define two vectors to be orthogonal if (x,y) = 0. Then $\theta = 90^\circ$, which implies that x is perpendicular to y. Note that by changing the inner product through a change in Q, we can change the definition of the angles between vectors.

A set of vectors $s_1, \dots, s_k$ is orthogonal if the vectors are pairwise orthogonal.

A vector x is said to be orthogonal to a subspace S if x is perpendicular to every y in S.

Subspace $S_1$ is perpendicular to $S_2$ if x is perpendicular to y for all $x \in S_1$ and $y \in S_2$. Let S be any subspace in V; then

$$S^\perp = \{ v \in V \mid v \perp S \}$$

This is called the orthogonal complement of S.
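For $R^n$ with the standard inner product, a vector is orthogonal to $S$ exactly when it is orthogonal to each vector in a spanning set of $S$, so $S^\perp$ is the null space of the matrix whose rows span $S$. A sketch using exact row reduction (the function name `orthogonal_complement` is hypothetical):

```python
from fractions import Fraction

def orthogonal_complement(spanning):
    """Basis for S_perp in R^n, where S = span(spanning), under the standard inner product.

    Reduce the matrix whose rows are the spanning vectors to reduced row echelon form;
    its null space (all v with (s, v) = 0 for every spanning s) is S_perp.
    """
    rows = [[Fraction(x) for x in s] for s in spanning]
    n = len(rows[0])
    pivots = []
    r = 0
    for col in range(n):
        p = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        piv = rows[r][col]
        rows[r] = [x / piv for x in rows[r]]             # make the pivot 1
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)                            # set one free variable to 1
        for k, pc in enumerate(pivots):
            v[pc] = -rows[k][free]                       # solve for the pivot variables
        basis.append(v)
    return basis

# S = span{(1, 1, 0)} in R^3; its orthogonal complement is a plane.
perp = orthogonal_complement([[1, 1, 0]])
print([[str(x) for x in v] for v in perp])   # [['-1', '1', '0'], ['0', '0', '1']]
```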