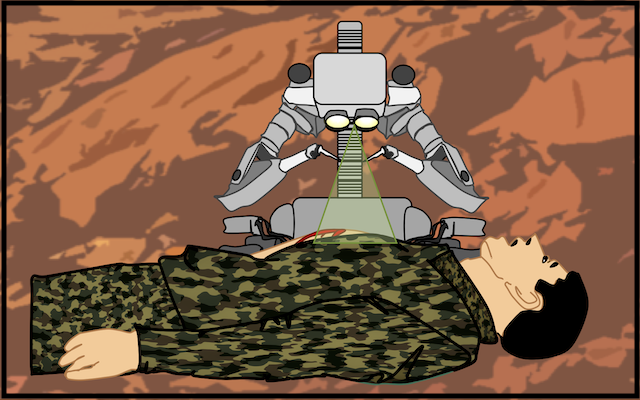

Our objective is to develop a theoretical framework for supervised autonomy in which a system can self-adjust its autonomous behavior and perform procedures in previously unseen settings using a transfer learning paradigm. Our working hypothesis is that an existing procedure can be adapted to a new domain using an encoding scheme to restore supervisory content, combined with a one-shot learning framework.

Sample paper: Rahman MM, Sanchez-Tamayo N, Gonzalez G, Agarwal M, Aggarwal V, Voyles RM, Xue Y, Wachs J. Transferring Dexterous Surgical Skill Knowledge between Robots for Semi-autonomous Teleoperation. In 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2019 Oct 14 (pp. 1-6).

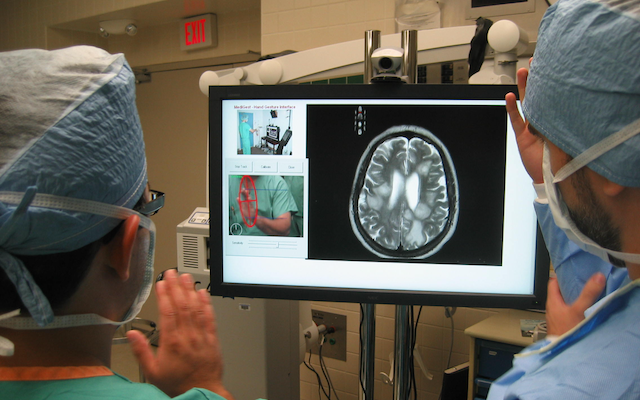

The use of keyboards and mice in the surgical setting can compromise sterility and spread infection. Instead, interfaces based on natural means of communication, such as gestures, have been suggested to address this problem. This research proposes an analytical and systematic approach for the design of gesture lexicons for the operating room.

Sample paper: Madapana N, Gonzalez G, Rodgers R, Zhang L, Wachs JP (2018) Gestures for Picture Archiving and Communication Systems (PACS) operation in the operating room: Is there any standard? PLoS ONE 13(6): e0198092.

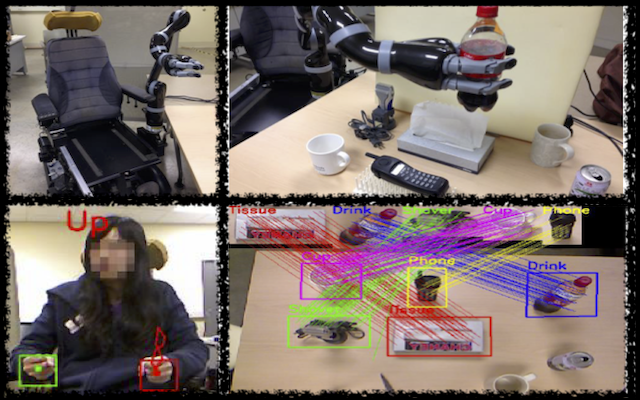

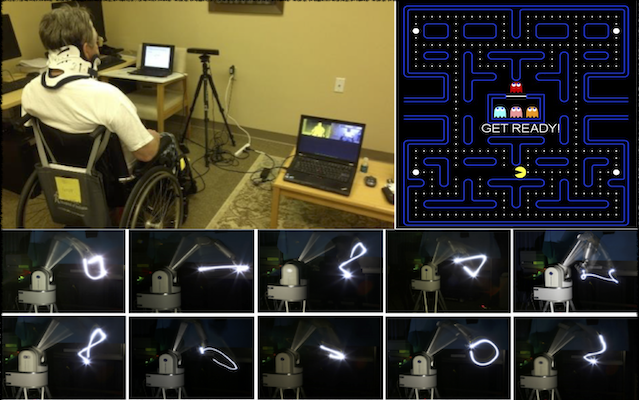

We developed an integrated, computer vision-based system to operate a commercial wheelchair-mounted robotic manipulator (WMRM). In addition, we developed a gesture recognition interface specifically for individuals with upper-level spinal cord injuries; it includes object tracking, face recognition, and a hands-free WMRM controller.

Sample paper: Jiang, H., Duerstock, B. S., and Wachs, J. P. (2013). A machine vision-based gestural interface for people with upper extremity physical impairments. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 44(5), 630-641.

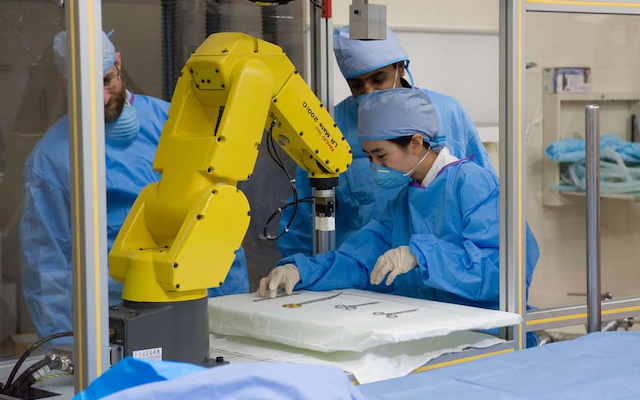

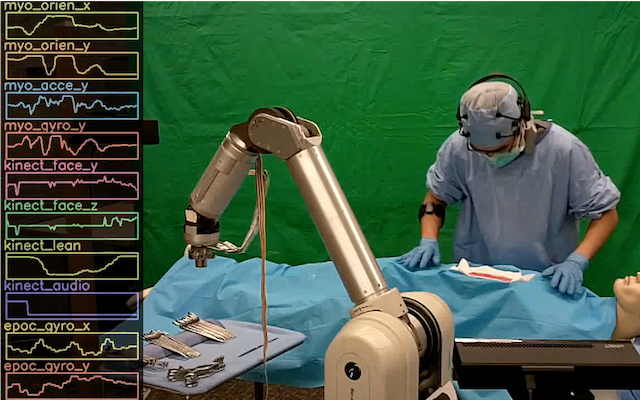

This is an automated solution that anticipates and detects a surgeon’s requests without requiring the surgeon to alter her behavior or undergo re-training. This automated solution prevents many communication failures in the OR. To this end, we developed a holistic approach that considers the variability of surgical teams and instrumentation, surgeons’ verbal and nonverbal communication, and the context of the surgery.

Sample paper: Jacob M, Li YT, Akingba G, Wachs JP. Gestonurse: a robotic surgical nurse for handling surgical instruments in the operating room. Journal of Robotic Surgery. 2012 Mar 1;6(1):53-63.

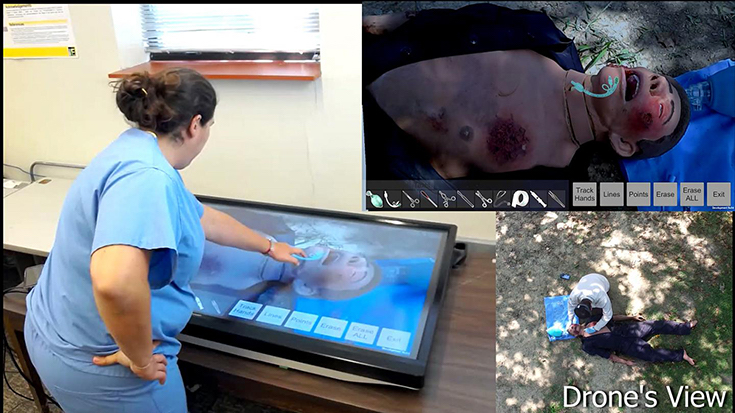

The goal is to develop and assess a System for Telementoring with Augmented Reality (STAR) that increases the mentor’s and trainee’s sense of co-presence through an augmented visual channel. The technology uses see-through glasses and augmented reality for surgical annotations.

Sample paper: Rojas-Muñoz E, Cabrera ME, Andersen D, Popescu V, Marley S, Mullis B, Zarzaur B, Wachs J. Surgical telementoring without encumbrance: a comparative study of see-through augmented reality-based approaches. Annals of Surgery. 2019 Aug 1;270(2):384-9.

The objective of this work is to incorporate gesture variability analysis into the existing framework, using robotics as an additional means of validation. Based on this, a physical metric (referred to as work) was obtained empirically to compare the physical effort of each gesture. The resulting vocabularies were tested in a Pac-Man game.

Sample paper: Jiang H, Duerstock BS, Wachs JP. User-centered and analytic-based approaches to generate usable gestures for individuals with quadriplegia. IEEE Transactions on Human-Machine Systems. 2015 Nov 20;46(3):460-6.

In addition to its main application, the theories and technologies involved in this proposal impact other fields in which dexterity and tactile feedback are key to successful task completion, such as tele-surgery. A unique feature of this project is a set of bimanual tool tips equipped with multi-sensory devices for collecting tactile, force, and chemical composition information for target characterization and action.

Sample paper: Xiao C, Wachs J. Triangle-Net: Towards Robustness in Point Cloud Classification. arXiv preprint arXiv:2003.00856. 2020 Feb 27.

We present the Turn-Taking Spiking Neural Network (TTSNet), a cognitive model that performs early turn-taking prediction about a human’s or agent’s intentions. The TTSNet framework relies on implicit and explicit multimodal communication cues (physical, neurological, and physiological) to predict robustly and unambiguously when a turn-taking event will occur.

Sample paper: Zhou T, Wachs JP. Spiking Neural Networks for early prediction in human–robot collaboration. The International Journal of Robotics Research. 2019 Dec;38(14):1619-43.

This research developed a real-time multimodal user interface that conveys visual information to blind users through haptic, auditory, and vibrational feedback. It can also suggest exploratory strategies to users based on their exploration behavior, helping them understand the image more accurately and efficiently.

Sample paper: Zhang T, Duerstock BS, Wachs JP. 2017. Multimodal Perception of Histological Images for Persons Who Are Blind or Visually Impaired. ACM Trans. Access. Comput. 9, 3, Article 7 (February 2017).

(Currently Program Director at NSF)

University Faculty Scholar

Regenstrief Center for Healthcare Engineering

Adjunct Professor of Surgery

IU School of Medicine

Professor of Biomedical Engineering (by courtesy)

School of Industrial Engineering

School of Industrial Engineering

Purdue University

nmadapan{at}purdue{dot}edu

School of Industrial Engineering

Purdue University

xiao237{at}purdue{dot}edu

School of Industrial Engineering

Purdue University

edtec217{at}gmail{dot}com

School of Industrial Engineering

Purdue University

gonza337{at}purdue{dot}edu

School of Industrial Engineering

Purdue University

juliet.tingcheung{at}gmail{dot}com

School of Industrial Engineering

Purdue University

barragan{at}gmail{dot}com

School of Electrical and Computer Engineering

Purdue University

zhan3275{at}purdue{dot}edu

School of Industrial Engineering

Purdue University

n.sanchez3330{at}gmail{dot}com

School of Industrial Engineering

Purdue University

cenebech{at}gmail{dot}com

School of Industrial Engineering

Purdue University

dchancia{at}purdue{dot}edu

School of Industrial Engineering

Purdue University

sanch174{at}gmail{dot}com

Our vision is to enable robots to understand the variability and range of human motions and gestures (including physical constraints) to support patient rehabilitation (instead of the other way around), to facilitate work with machines, and to improve surgical outcomes in the Operating Room through medical robotics.

We are currently working on fundamental new problems at the intersection of robotics and human-AI interaction. I particularly want to study new AI and data science-based paradigms that can help transfer learning from controlled settings to uncontrolled or austere scenarios. Of special interest are transfer learning theories that explore the ability of machines to recognize actions and execute them effectively after observing only a few instances of them.

These are brief quotes from media appearances of our work at ISAT labs.

"Surgeons of the future might use a system that recognizes hand gestures as commands to control a robotic scrub nurse or tell a computer to display medical images of the patient during an operation."

Science Daily


"That’s Dr. Juan Wachs, the professor who helped design this, a machine that’s also good at preventing what he calls “retained instruments.” Retained, as in surgical tools being sewed up inside patients by mistake."

NPR Marketplace


"Purdue University researchers are developing a gesture-driven robotic scrub nurse prototype that may one day relieve the nurse of some of her technical duties or replace the scrub technician"

Fox News


"Blind people ‘see’ microscope images using touch-feedback device."

NewScientist


"He and his team are developing devices like augmented reality lens to allow surgeons to collaborate via hologram-like visuals in real time from afar, AI-imbued robots to assist doctors in operation."

WIRED


"Researchers are developing an “augmented reality telementoring” system that could provide effective support to surgeons on the battlefield from specialists thousands of miles away."

Futurity


"Juan Wachs, an assistant professor at Purdue University who builds gestural interfaces to help surgeons work with robots in the operating room."

Perfil Noticias


"Robots in the operating room: the Argentine pioneer of remote surgery"

La Nacion


"Teleportation ala Star Trek—beaming a person place to place—remains science fiction, but a Purdue University researcher is developing a system for surgeons that he believes could be the next best thing."

Inside Indiana Business

